Data Science is a relatively young discipline: it has been around for about fifty years. It arose from the need to bring order to a very lively and rapidly evolving field. The growth in the volume of data, together with the ability to give meaning to that data, gave rise to Data Science.

Historically, data has often been treated as a kind of by-product of any process. Those who collected data over the centuries did so mainly for their own convenience, often without imagining that a collection of data could one day have economic value. Consider, for example, a farm that over the years collected information on crops, events, sowing and so on, perhaps simply to archive its own history. If every farm had done the same, fertilizer companies could benefit from that data today, for research or for marketing purposes.

A person who works in Data Science is called a data scientist, currently one of the most sought-after professionals on the job market.

The data scientist's task is to analyze data in order to identify models within it, that is, what the available data express through their trends. Identifying these models serves the client's purposes, whether the client is a company, a public body, etc.

In recent years, a data market model has increasingly taken hold, in which some parties are interested in selling data and others in buying it.

Companies specialized in producing data were born, as well as companies specialized in buying and selling it after appropriate cleaning and reprocessing. If we also consider privacy regulations, we realize how complex the subject is: today there are strict laws that require a conscious and respectful use of information.

A Data Science project usually consists of the following steps:

At each step the data scientist interacts with specific company departments, so the data scientist is fully integrated into the company's operations.

With technological progress, the data scientist has increasingly had to deal with Big Data and Artificial Intelligence problems.

When we talk about Big Data, we refer to data characterized by great variety, arriving in increasing volumes and at ever greater speed. This concept is also known as the rule of the three Vs, three terms that capture the essential features of the Big Data phenomenon: Volume, Velocity and Variety.

Over time, other characteristics have been added, such as veracity, which describes how truthful and reliable the data are.

Large volumes of data arriving at high speed and with great variety inevitably raise problems of data organization.

Should we accept the data as they arrive and process them afterwards? Or should we structure them first and then process them?
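The two options raised by these questions are commonly called schema-on-write (structure first, then process) and schema-on-read (store as-is, structure only when reading). The following is a minimal sketch contrasting them, assuming JSON-encoded records; the sensor records, field names and function names are hypothetical and only illustrate the trade-off.

```python
import json

# Hypothetical raw records arriving from different sources, with uneven fields.
raw_events = [
    '{"sensor": "field-1", "temp": "21.5"}',
    '{"sensor": "field-2", "temp": "19.0", "humidity": "40"}',
    '{"sensor": "field-3"}',
]

# Option 1, schema-on-write: validate and convert each record when it is stored.
# Records that do not fit the schema are rejected, and extra fields are dropped.
def ingest_structured(lines):
    table = []
    for line in lines:
        record = json.loads(line)
        if "temp" not in record:
            continue  # does not fit the schema: rejected at write time
        table.append({"sensor": record["sensor"], "temp": float(record["temp"])})
    return table

# Option 2, schema-on-read: keep the raw lines untouched...
def ingest_raw(lines):
    return list(lines)

# ...and apply structure only when a question is asked of the data.
def average_temp_on_read(raw_store):
    temps = [float(r["temp"]) for r in map(json.loads, raw_store) if "temp" in r]
    return sum(temps) / len(temps)

structured = ingest_structured(raw_events)
raw_store = ingest_raw(raw_events)
print(len(structured), "structured rows;", len(raw_store), "raw records kept")
print("average temperature:", average_temp_on_read(raw_store))
```

Structuring on write makes later processing simple but discards whatever does not fit the schema (here the humidity value and the incomplete third record); storing raw data keeps everything available for future questions, at the cost of doing the parsing work on every read.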

Several paradigms for organizing data systems have emerged and established themselves over time:

These are currently the most widely used paradigms, and in many cases an integrated solution prevails: different projects may use different storage approaches and be integrated at a later stage. Different data may be collected with different paradigms, or different collections may form consecutive phases of the same life cycle.

Despite their great usefulness, we know very well that computers are "stupid": a computer can do nothing unless a human being analyzes a problem, formulates an algorithm and encodes it in a program.

This has always been the case, until we started talking about Artificial Intelligence. Artificial Intelligence consists in inducing a kind of spontaneous reasoning in the machine, which can lead it to solve problems independently, that is, without direct human guidance.

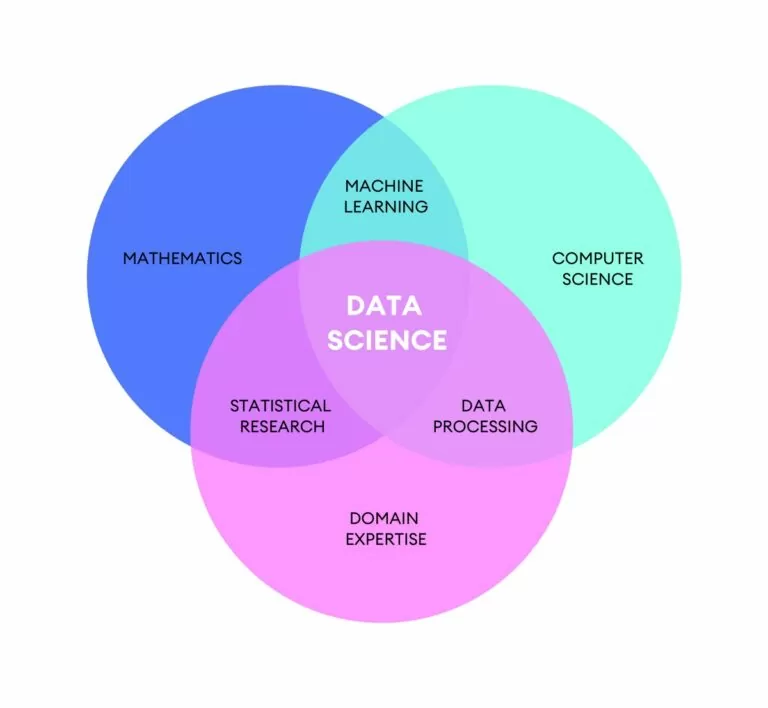

It took several years before the idea of "inducing a kind of spontaneous reasoning in the machine" became reality, that is, before we moved from a condition in which the machine was entirely "forced" through explicit instructions to one of self-learning. In other words, the machine became able to learn by itself. This is how we arrived at Machine Learning.

Machine Learning is a branch of Artificial Intelligence in which the programmer guides the machine through a training phase based on the study of historical data. At the end of this training phase, a model is produced that can be applied to solve problems involving new data.
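To make the two phases concrete, here is a minimal sketch, assuming the simplest possible model: a straight line y = slope * x + intercept fitted to historical data by ordinary least squares. Real Machine Learning models are far richer; the data and the names `fit_line` and `predict` are hypothetical.

```python
# Training phase: estimate the model parameters from historical data
# using ordinary least squares for a straight line.
def fit_line(xs, ys):
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Application phase: use the trained model on a new input.
def predict(model, x):
    slope, intercept = model
    return slope * x + intercept

# Hypothetical historical data, e.g. a farm's input quantity vs. its yield.
history_x = [1.0, 2.0, 3.0, 4.0, 5.0]
history_y = [2.1, 3.9, 6.2, 7.8, 10.1]

model = fit_line(history_x, history_y)
print(f"slope={model[0]:.3f}, intercept={model[1]:.3f}")
print(f"prediction for a new x=6: {predict(model, 6.0):.2f}")
```

Nobody wrote the rule "multiply by about 2" into the program: the numbers were derived from the historical data during training, and the resulting model is then reused on inputs it has never seen.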

Compared with the classic approach, in which the data scientist worked to define solution algorithms, here it is the machine that discovers what makes up the model. The data scientist must organize increasingly effective training phases, with richer and more meaningful data, and verify the validity of the resulting models by testing them.
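Testing a model usually means measuring its error on data that was not used for training. The following is a minimal sketch under stated assumptions: the model is again a least-squares line, the data is split chronologically (first 80% for training, last 20% for testing) and the metric is mean absolute error (MAE). The data and the function names are hypothetical; the last two points deliberately depart from the earlier trend so the test reveals an error that the training data alone would hide.

```python
# Least-squares line fit (training phase).
def fit_line(xs, ys):
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

# Mean absolute error of the model's predictions against known outcomes.
def mean_absolute_error(model, xs, ys):
    slope, intercept = model
    errors = [abs((slope * x + intercept) - y) for x, y in zip(xs, ys)]
    return sum(errors) / len(errors)

# Train on the first part of the data, then test on the held-out remainder.
def train_and_test(xs, ys, train_fraction=0.8):
    cut = int(len(xs) * train_fraction)
    model = fit_line(xs[:cut], ys[:cut])
    error = mean_absolute_error(model, xs[cut:], ys[cut:])
    return model, error

# Hypothetical data: the first 8 points follow y = 2x + 1, the last 2 drift upward.
xs = [float(i) for i in range(10)]
ys = [2.0 * x + 1.0 for x in xs[:8]] + [20.0, 22.0]

model, error = train_and_test(xs, ys)
print(f"model: slope={model[0]:.2f}, intercept={model[1]:.2f}")
print(f"mean absolute error on the test set: {error:.2f}")
```

The model fits the training data perfectly, yet the test set shows an average error of 3 units: exactly the kind of signal that tells the data scientist the model, or the training data, needs revisiting.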

Thanks to Machine Learning, the systems we use on mobile devices, on the internet and in home automation are (or seem) increasingly intelligent. While it runs, a system may also collect data about itself and its users, use that data in the training phase, and thereby further improve its predictions.
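A system that keeps learning from the data it collects can be sketched as a model that is updated after every new observation. This is only an illustration, assuming a least-squares line whose running sums (n, Σx, Σy, Σxy, Σx²) are updated incrementally so that no raw history needs to be stored; the class name, the methods and the usage data are hypothetical.

```python
class OnlineLinearModel:
    """Least-squares line refitted from running sums as new data arrives."""

    def __init__(self):
        self.n = 0
        self.sum_x = 0.0
        self.sum_y = 0.0
        self.sum_xy = 0.0
        self.sum_xx = 0.0

    def observe(self, x, y):
        """Record one new (input, outcome) pair collected during use."""
        self.n += 1
        self.sum_x += x
        self.sum_y += y
        self.sum_xy += x * y
        self.sum_xx += x * x

    def coefficients(self):
        """Return (slope, intercept); needs at least two distinct x values."""
        denom = self.n * self.sum_xx - self.sum_x ** 2
        if self.n < 2 or denom == 0:
            raise ValueError("not enough varied data to fit a line yet")
        slope = (self.n * self.sum_xy - self.sum_x * self.sum_y) / denom
        intercept = (self.sum_y - slope * self.sum_x) / self.n
        return slope, intercept

    def predict(self, x):
        slope, intercept = self.coefficients()
        return slope * x + intercept


# Hypothetical usage data, noisy around y = 2x, arriving one record at a time.
model = OnlineLinearModel()
stream = [(0, 1), (1, 1), (2, 5), (3, 5), (4, 9), (5, 9)]
for i, (x, y) in enumerate(stream, start=1):
    model.observe(x, y)
    if i >= 2:
        print(f"after {i} observations: prediction for x=10 is {model.predict(10):.2f}")
```

With only two observations the model predicts 1.00 for x=10; after all six it predicts about 18.71, much closer to the underlying trend of 20. The improvement is not guaranteed to be monotonic at every step, but more representative data generally yields a better model.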

Ercole Palmeri: Innovation addicted
